Nudify AI: Understanding the Technology, Risks, and Protection Strategies

Artificial intelligence has transformed many aspects of our digital lives, but not all applications are created with positive intentions. Nudify AI technology, which uses artificial intelligence to create fake nude images of people without their consent, has become increasingly prevalent online. This article explores what Nudify AI is, the serious ethical and legal concerns it raises, and most importantly, how you can protect yourself and others from this form of digital abuse.

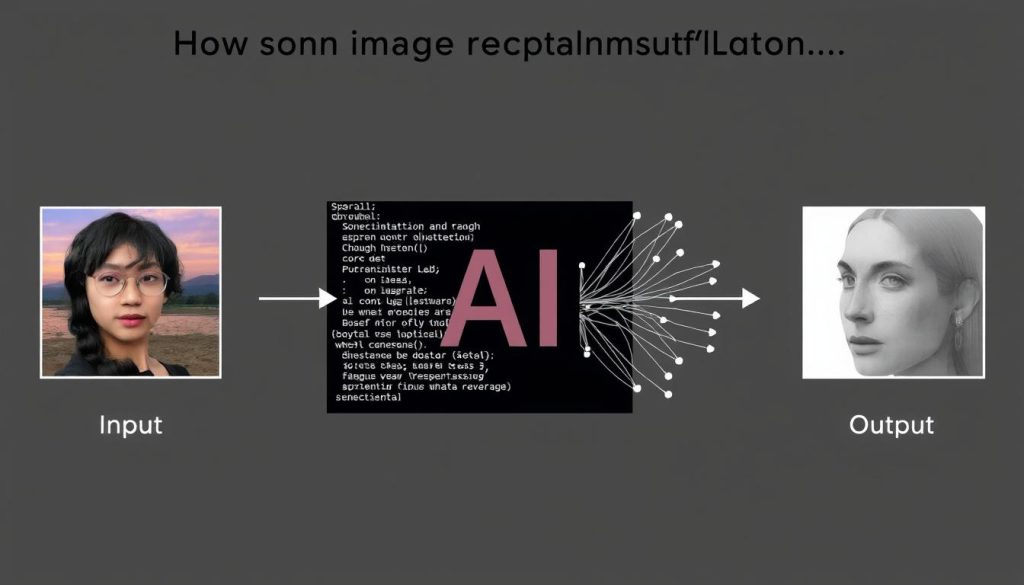

What Is Nudify AI and How Does It Work?

Nudify AI refers to applications and websites that use artificial intelligence algorithms to digitally remove or alter clothing in photographs, creating fake nude images of real people. These tools have proliferated across the internet in recent years, with some services receiving millions of visits monthly. The technology behind these apps typically uses advanced AI models called “diffusion models” that can generate highly realistic fake images.

How These Applications Operate

Most Nudify AI services follow a similar pattern:

- Users upload photos of fully clothed individuals

- AI algorithms process these images to create fake nude versions

- The resulting images are generated without the knowledge or consent of the person in the original photo

- Many services charge fees ranging from $2 to $40 per image or offer subscription models

According to research by Graphika, a social network analysis company, 24 million people visited websites offering these services in September 2023 alone. The rise in popularity corresponds with the release of several open-source AI models that can create increasingly realistic images.

Ethical and Legal Concerns Surrounding Nudify AI

The proliferation of Nudify AI technology raises serious ethical and legal concerns. These applications essentially create non-consensual intimate imagery, which can cause significant emotional harm to victims. The creation and distribution of such content without consent is a form of image-based sexual abuse.

Emotional Impact on Victims

Victims of Nudify AI abuse often experience severe emotional distress. As reported by CNBC, victims describe feelings of violation, anxiety, and loss of control over their own identity. One victim explained that even hearing a camera click could trigger panic attacks after discovering manipulated images of herself online.

“It makes you feel like you don’t own your own body, that you’ll never be able to take back your own identity,” said Mary Anne Franks, professor at George Washington University Law School and president of the Cyber Civil Rights Initiative.

Legal Status and Challenges

The legal framework surrounding Nudify AI varies globally and is still evolving:

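- In the United States, no federal law specifically bans the creation of deepfake pornography of adults, although the TAKE IT DOWN Act, signed in May 2025, makes it a federal crime to knowingly publish non-consensual intimate images, including AI-generated ones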

- Creating such content featuring minors is illegal under child sexual abuse material laws

- Some states have begun introducing legislation to address this issue

- Many victims find limited legal recourse when their images are manipulated

In November 2023, a North Carolina child psychiatrist was sentenced to 40 years in prison for using undressing apps on photos of his patients, marking the first prosecution under laws banning deepfake generation of child sexual abuse material.

How Tech Companies Are Responding to Nudify AI

Major technology companies have begun taking action against Nudify AI services, though critics argue these efforts are often reactive rather than proactive. Here’s how different companies are addressing the issue:

Meta's Legal Action and Policy Changes

Meta (parent company of Facebook and Instagram) has taken several steps to combat Nudify AI:

- Filed a lawsuit against Joy Timeline HK Limited, the entity behind CrushAI apps

- Updated policies to explicitly prohibit the promotion of “nudify” apps

- Developed new technology to detect ads for these services

- Shared information about violating websites with other tech companies

- Blocked links to these websites from their platforms

“This legal action underscores both the seriousness with which we take this abuse and our commitment to doing all we can to protect our community from it,” Meta stated in a blog post.

Other Platform Responses

Search and App Platforms

- Google has removed ads that violate its policies against sexually explicit content

- Apple and Google face criticism for allowing some of these apps in their stores

- TikTok has blocked the keyword “undress” in searches

Authentication and Payment Services

- Discord, Apple, and other companies have terminated developer accounts linked to these services

- Payment processors like Mastercard have policies against processing payments for non-consensual content

How to Protect Yourself from Nudify AI Abuse

While the responsibility for preventing abuse lies with those creating and distributing non-consensual images, there are steps you can take to better protect yourself online:

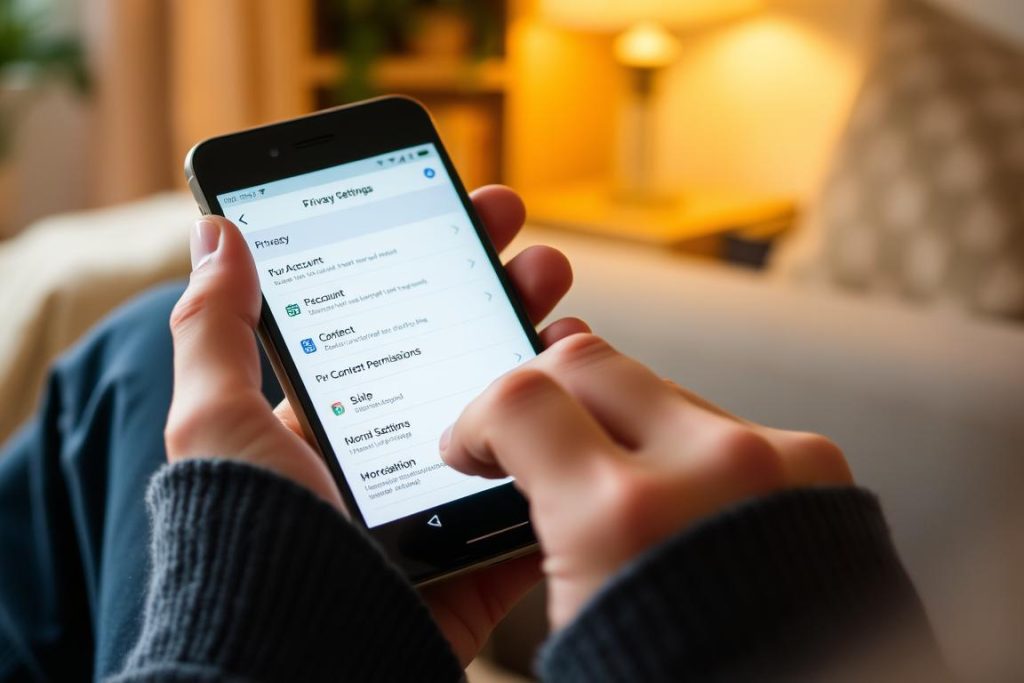

Social Media Privacy Settings

Reviewing and strengthening your social media privacy is an important first step:

- Set accounts to private where possible

- Regularly audit who can see your photos

- Be selective about which images you share publicly

- Consider watermarking important photos

- Disable facial recognition features when available

Digital Footprint Management

Managing your overall digital presence can reduce risk:

- Regularly search your name and images to monitor where they appear

- Use reverse image search tools to find where your photos are being used

- Request removal of your images from sites where they’re used without permission

- Consider using tools like StopNCII.org that help prevent the spread of non-consensual intimate images

“We must be clear that this is not innovation, this is sexual abuse. These websites are engaged in horrific exploitation of women and girls around the globe.”

Resources for Victims

If you discover your images have been manipulated without consent:

Immediate Actions

- Document everything with screenshots (including URLs and dates)

- Report content to the hosting platform

- Contact website administrators for removal
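
The documentation step above can be sketched in code. This is a minimal illustration, assuming you have already saved screenshots as files on your own device. The function names (`make_evidence_record`, `append_record`) and the one-JSON-object-per-line log format are hypothetical choices for this example and are not part of any platform's or organization's reporting process. The SHA-256 fingerprint only shows that a saved screenshot file has not been altered since it was logged; it does not detect or match images online.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_evidence_record(url, screenshot_bytes, note="", captured_at=None):
    """Build one log entry: where the content was seen, when, and a
    fingerprint of the screenshot file (hypothetical record layout)."""
    when = captured_at or datetime.now(timezone.utc)
    return {
        "url": url,
        # Store the time in UTC so entries are unambiguous across time zones.
        "captured_at_utc": when.astimezone(timezone.utc).isoformat(timespec="seconds"),
        # The hash lets you later show the saved screenshot is unchanged.
        "screenshot_sha256": hashlib.sha256(screenshot_bytes).hexdigest(),
        "note": note,
    }


def append_record(log_path, record):
    """Append the record as one JSON line so earlier entries are never rewritten."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

To use it, read a saved screenshot with `open(path, "rb").read()`, pass the bytes and the page URL to `make_evidence_record`, and call `append_record` with a log file of your choosing. Keeping the log append-only, alongside the original screenshot files, makes it easier to hand a consistent timeline to a platform, a lawyer, or law enforcement.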

Support Resources

- Cyber Civil Rights Initiative hotline: 844-878-2274

- Without My Consent (legal resources)

- Local law enforcement (especially if minors are involved)

Legislative Efforts to Combat Nudify AI

Technology is evolving faster than legislation, and lawmakers are working to close the gaps in legal protection against Nudify AI abuse.

Current and Proposed Legislation

Several legislative efforts are underway:

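- The TAKE IT DOWN Act in the U.S. – a bipartisan federal law, signed in May 2025, that criminalizes publishing non-consensual intimate images (including AI-generated ones) and requires platforms to remove them within 48 hours of a valid request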

- State-level bills like the one proposed in Minnesota that would fine companies offering nudify services

- In England, the Children’s Commissioner has called for legislation to ban these apps altogether

- Legislation supporting parental oversight of teen app downloads

These efforts face challenges, including balancing regulation with innovation and addressing the global nature of the internet. Many advocates argue that comprehensive federal legislation is needed to effectively address the issue.

Reporting Mechanisms

Several reporting mechanisms have been developed to help victims:

- StopNCII.org – a platform that helps prevent the sharing of non-consensual intimate images

- NCMEC’s Take It Down – a service specifically designed to help remove explicit images of minors

- Platform-specific reporting tools on major social media sites

- Law enforcement reporting channels for cases involving minors

Frequently Asked Questions About Nudify AI

Is using Nudify AI technology legal?

The legality varies by jurisdiction. Creating fake nude images of adults without consent exists in a legal gray area in many places, though this is changing as new legislation is introduced. Creating such images of minors is illegal in most countries and classified as child sexual abuse material. Even when not explicitly illegal, using such technology can potentially violate various laws related to harassment, defamation, and privacy.

How can I tell if an image has been manipulated by AI?

While AI-generated images are becoming increasingly realistic, they often contain subtle inconsistencies. Look for unusual textures, unnatural body proportions, strange artifacts in the image, or inconsistent lighting and shadows. Some tools are being developed to detect AI-manipulated images, though this remains challenging as the technology improves.

What should I do if I find my images have been manipulated?

If you discover manipulated images of yourself online: 1) Document everything with screenshots, including URLs and dates, 2) Report the content to the hosting platform, 3) Contact website administrators requesting removal, 4) Consider consulting with a lawyer about potential legal actions, 5) Reach out to support organizations like the Cyber Civil Rights Initiative for guidance, and 6) Report to law enforcement if the content involves minors.

How are tech companies addressing Nudify AI abuse?

Tech companies are taking various approaches, including: removing ads promoting these services, blocking keywords related to “nudify” apps, terminating developer accounts linked to these services, sharing information about violating websites with other companies, taking legal action against developers of these apps, and developing technology to detect and remove such content.

Can Nudify AI apps really create fake images of anyone?

Unfortunately, current AI technology makes it possible to create fake nude images of virtually anyone from regular photographs. The quality and realism vary, but the technology continues to improve. This is why awareness, protective measures, and legislative action are so important.

Are there legitimate uses for AI image manipulation technology?

Yes, AI image manipulation has many legitimate and creative applications in fields like art, film, fashion, and medicine. The ethical concerns arise when this technology is used without consent to create fake intimate images of real people. The technology itself is neutral; the ethical issues lie in how people choose to use it.

How can parents protect their children from Nudify AI technology?

Parents can take several steps: educate children about online privacy and the risks of sharing photos, monitor their social media accounts and privacy settings, use parental controls to restrict app downloads, have open conversations about digital citizenship and consent, and know how to report inappropriate content if discovered.

What psychological impact can Nudify AI abuse have on victims?

Victims often experience significant psychological distress, including feelings of violation, anxiety, depression, and loss of control. Some report symptoms similar to those of other forms of sexual abuse, including panic attacks, difficulty trusting others, and fear in social situations. Professional support from counselors familiar with digital abuse can be helpful for those affected.

Protecting Digital Dignity in the Age of AI

As artificial intelligence technology continues to advance, the challenges posed by applications like Nudify AI highlight the urgent need for a multi-faceted approach to protection. Technology companies, legislators, and individuals all have important roles to play in preventing the misuse of these tools.

By staying informed about the risks, implementing strong privacy practices, supporting appropriate legislation, and knowing how to respond if victimized, we can collectively work toward a digital environment that respects human dignity and consent. The fight against non-consensual intimate imagery requires ongoing vigilance, but with coordinated efforts, we can create stronger protections for everyone online.
